Research and Publications

My research lies at the intersection of AI, robotics, and control, spanning the full spectrum from theory and foundations, through algorithm design, to real-world general-purpose robotic systems such as humanoids. I develop reliable, adaptive, and efficient learning and control methods for agile generalist robots. See my publications and research topics for more details.

Misc.

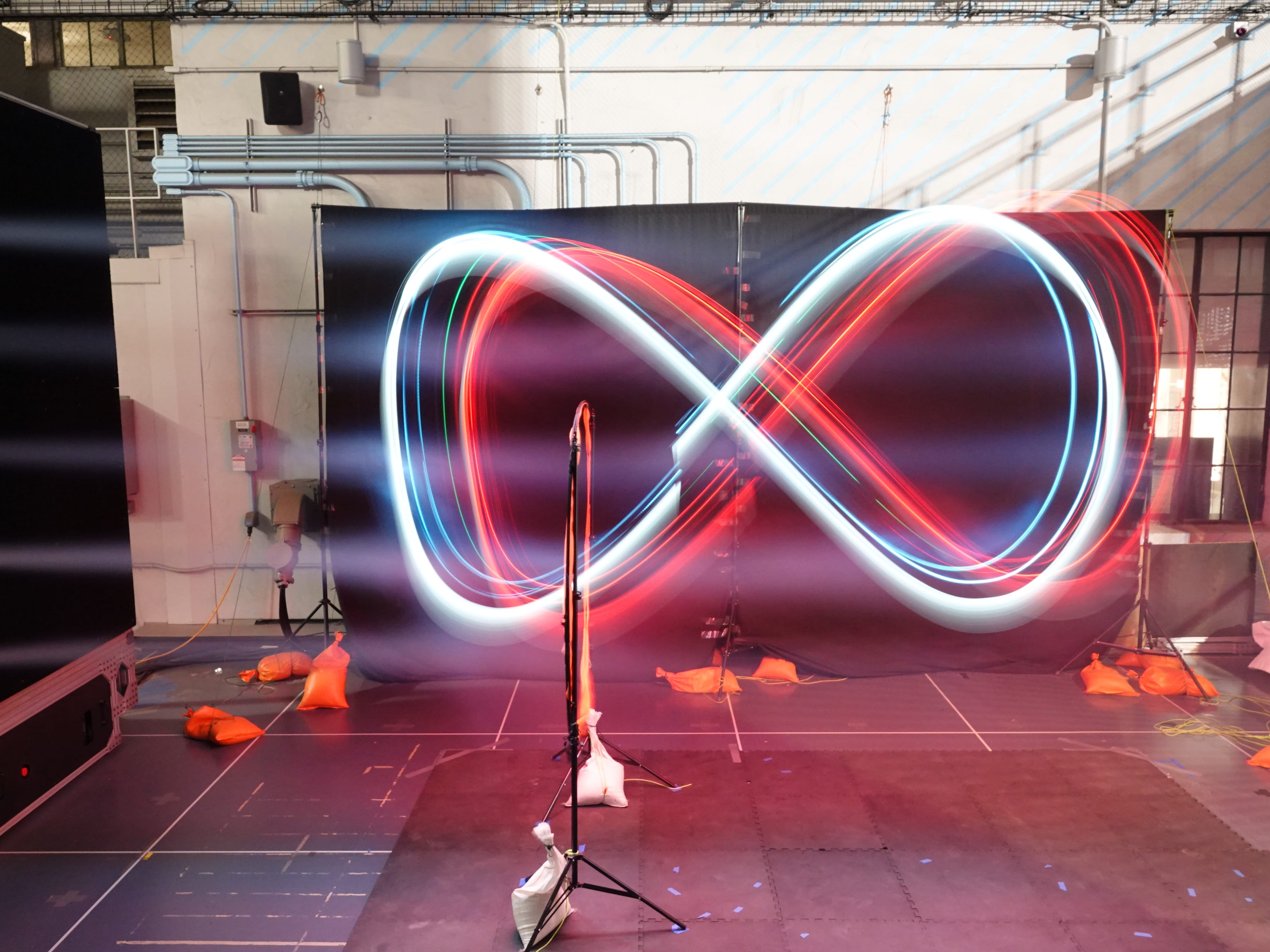

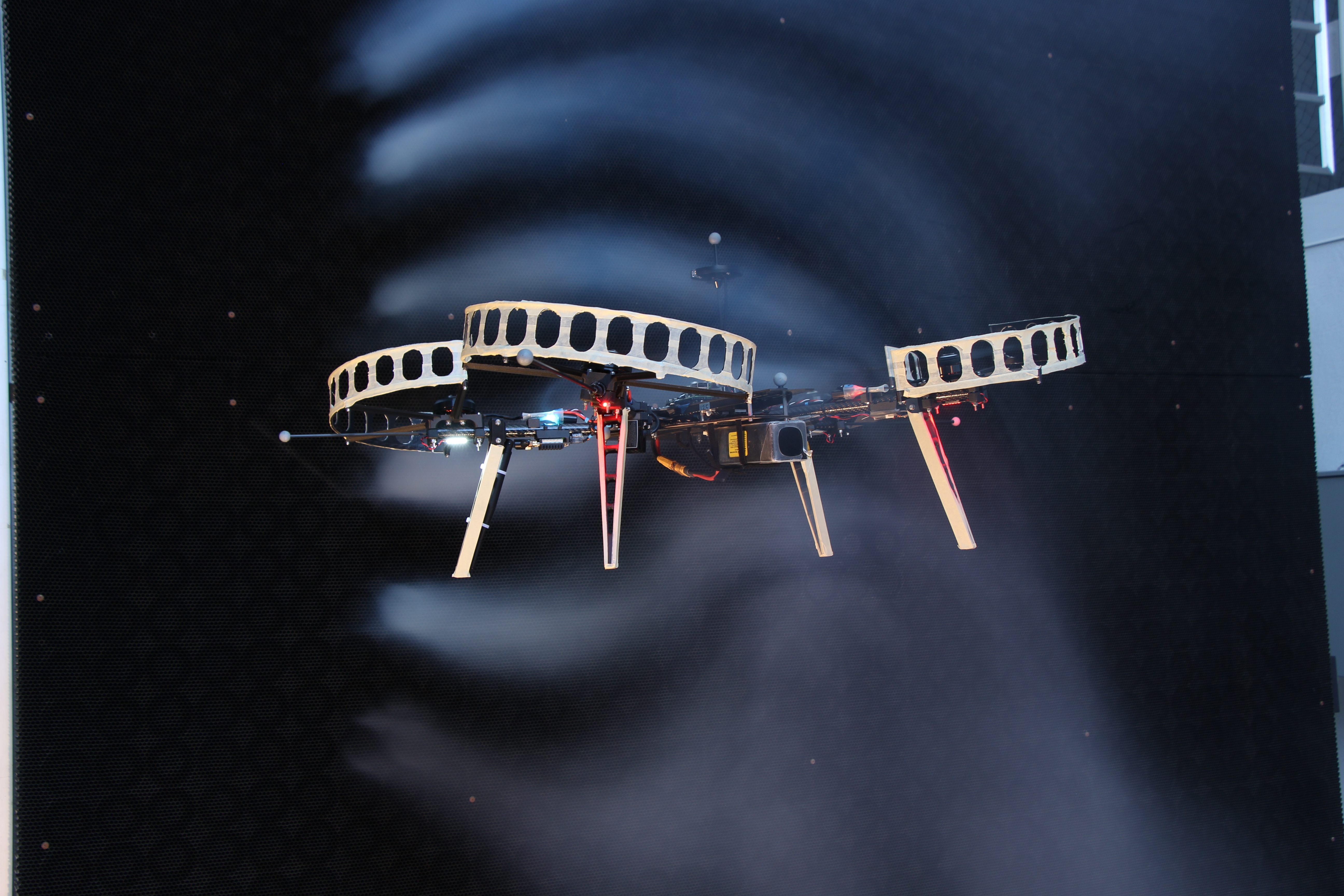

I love playing basketball, soccer, and MOBA games. I am also very interested in photography, hiking, travelling, and cooking. Here are some photos I have taken.

A drone flying in the Caltech Real Weather Wind Tunnel (Neural-Fly project)

Beijing National Stadium

Tokugawaen, Nagoya, Japan